Just the Beginning: Chicago-Kent Professor Demonstrates that GPT-4 Can Pass Bar Exam

It has been just a few months since the release of ChatGPT, one of the fastest-growing artificial intelligence consumer applications in history. In a paper released in December 2022, Chicago-Kent College of Law Professor Daniel Martin Katz evaluated how well GPT-3.5 (a close analog of ChatGPT) performs on the multiple-choice portion of the bar exam.

Although that version of GPT did not pass the exam, Katz predicted that a large language model could plausibly pass the multiple-choice portion of the bar exam within the next 18 months.

Soon afterward, Katz began work on a project testing the next generation of the model: GPT-4. The results of this research were released on March 14, 2023, as part of the GPT-4 launch event.

The paper, titled “GPT-4 Passes the Bar Exam” and co-authored with Michael Bommarito (Stanford CodeX/273 Ventures), Shang Gao (Casetext), and Pablo David Arredondo (Stanford CodeX/Casetext), demonstrates that GPT-4 can pass not only the multiple-choice portion of the exam but every part of the Uniform Bar Exam (UBE), including the essay portion and the performance test.

“After we wrote our other paper in December, people would tell me that they believed AI would eventually pass the multiple-choice portion. But lots of folks said, ‘Well, it is never going to be able to do the essays,’” Katz says.

Katz and his co-authors graded the essay and performance portions themselves. Katz says they did their best to grade fairly, but the model scored so well on the multiple-choice portion that it had a large margin for error on the essay and performance sections.

The program’s performance on the essay section was notable. Katz says there was only one clear sign that the essays were written by a computer.

“There were basically no typos. The grammar is near perfect,” he says. “In the real bar exam, folks are working quickly and so there are likely to be typos and grammar mistakes, even on exams that otherwise receive quite high scores.”

Previous models would “hallucinate,” confidently providing answers that were clearly incorrect and giving away that they were not human. Katz says that is less common with GPT-4. In many other ways, the writing read as though a student could have produced it. He says it even drifted from the main topic in a very human way.

“In several of the problems, ChatGPT and, to a much lesser extent, GPT-4 will talk about a topic that’s related, but not really relevant, for the question as it was posed. That’s kind of what a student would do,” he says. “When I was a student, it’s what I would have done if I didn’t totally know the answer. I’d write the closest thing possible and go with it and hope it worked. It’s a pattern I see all the time in real essay exams I grade as a professor.”

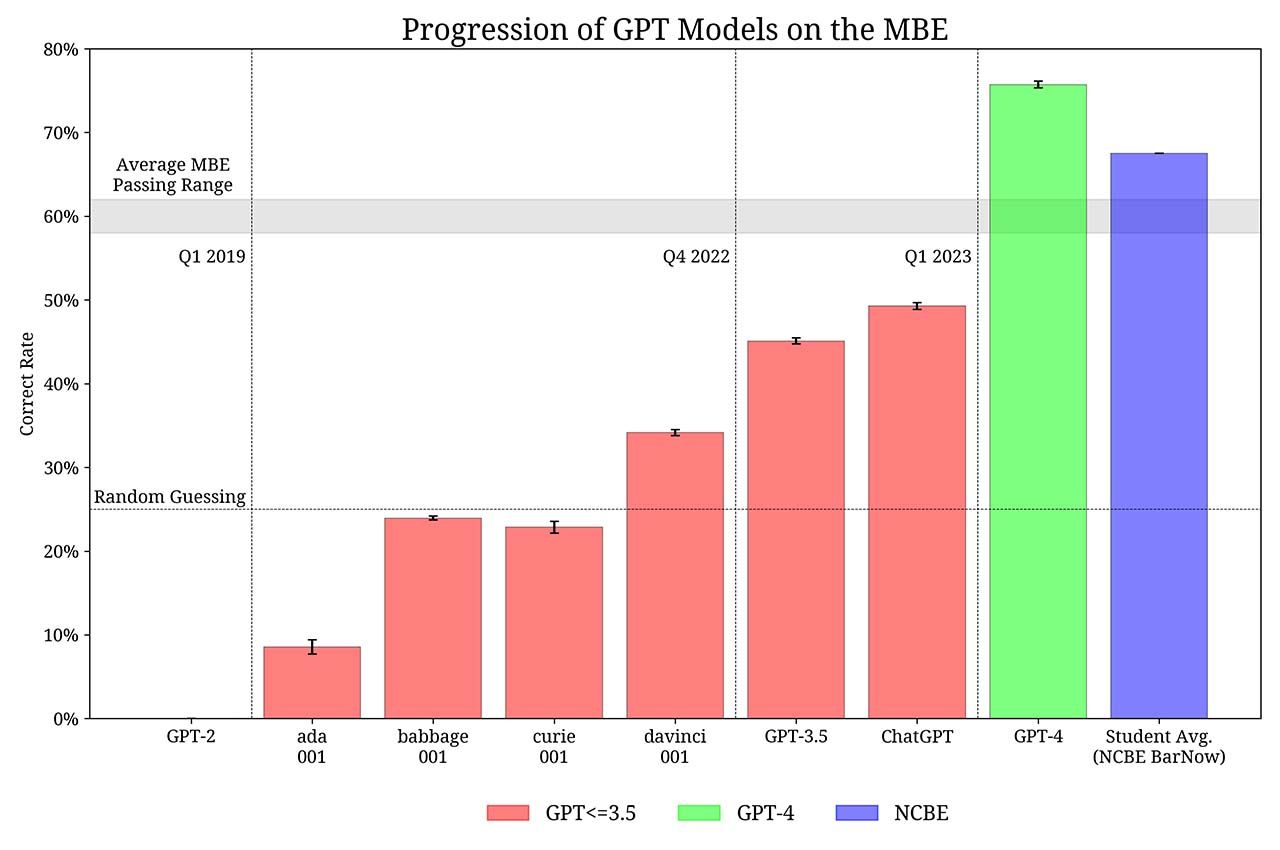

Less than three months ago, when Katz tested an earlier version of GPT (GPT-3.5), the software did not pass the multiple-choice portion, but it came reasonably close. He says successive GPT versions have improved significantly in a short period of time.

“Four years ago, GPT-2 couldn’t even consistently process the questions. Up until GPT-3, which was two years ago, it was barely beating statistical chance on the multiple choice,” he says.

Katz wasn’t surprised to see the model pass the multiple-choice section, but even he was shocked to see it pass the Multistate Performance Test (MPT), in which test takers complete legal tasks using only the fictional law supplied with the problem rather than the actual legal framework.

“It is difficult for people, too, to separate the law in the question from the broader law as folks might understand it—the test designers make you stay within the four corners of the problem as presented,” says Katz. “This shows that the software is able to, at a minimum, produce something akin to but not really fully the same as actual reasoning.”

After his December paper was published, Katz was approached to help test GPT-4 before its public release, and he was excited for the opportunity. His tests used a pre-release version of the model.

“I feel very thankful to be a part of it. In the history of AI, GPT-4 is one of the most important systems that’s ever been developed,” he says. “We are excited to report the results, but the bigger picture here is not the exam, per se; it is highlighting the nature of the capabilities that are coming online here. The bar exam analysis is just a way of demonstrating what is possible.”

He expects GPT-4 and models from other companies to be adapted into specialized versions that help people complete tasks across industries, and he expects more and more people to interact with these systems in their everyday lives.

“I think this is going to be one of the most exciting years in the technology world in a long, long time. That’s panning out so far. We’re not even through the first quarter,” he says.

In the legal realm, GPT-4-powered tools are already on the market. One of Katz’s co-authors on the paper, Arredondo, is co-founder and chief innovation officer of Casetext, a company launching CoCounsel, an AI tool for lawyers that is powered by GPT-4.

As these tools make their way into law offices across the country, Katz hopes their influence will reach beyond the legal profession itself. He believes AI could be a “force multiplier” that allows more people to access legal services that may previously have been too expensive.

“People have certain rights, but they don’t know how to enforce them,” he says. “As a result, they don’t pursue the things that they could. They give up. They feel disempowered. I’m not saying this is going to solve all those problems, but it can help.”

Photo (provided): chart showing the progression of GPT models on the Multistate Bar Examination (MBE)